Human Cognition and the Chinese Room

Today I’m going to use a famous philosophical thought experiment to help elucidate one of the most significant problems in cognitive science. Before I get into it, though, let me set the stage. There are various competing theories of cognition in the field of cognitive science. Some explain human cognition and thought through the manipulation of symbols; others explain it through a web of interconnected networks. While there are many different approaches, all of these theories have one thing in common: they are trying to explain how human thought and intelligence emerge out of the interaction of very stupid processes. How is it that an assortment of firing neurons can lead to consciousness? To see the relevance of this question, just think about a computer. Your computer can calculate math equations faster than you could ever dream of. It can handily beat you at chess. You ask your search engine a question and it pops up with a ton of relevant answers. There are even programs, going back as far as ELIZA in the mid-1960s, that can hold what seem like full-fledged conversations with you. Now, none of us confuse these programs for real people, and none of us think our computers are intelligent, at least not in the way we ascribe intelligence to humans. They are not aware; they are not conscious. They are just running programs. Some input comes in, calculations are done, output is spit out.

But what is so different about the way computers function and the way we function? Neuroscience has broken down human cognition and laid bare the simple and stupid processes that lie at the heart of it. You’re walking down the street, and suddenly you hear someone yell, “Watch out! Duck!” You quickly glance up and see something flying towards your head. You duck, and a baseball zooms past, narrowly missing you. Seems like an intelligent action, right? You heard someone say something, you understood their words, and you directed your attention based on them. You interpreted what you saw next as a danger to you, so you moved out of the way. At every step of the process you were aware and conscious, and you acted intelligently. You understood what was happening. But when we look at what actually happens inside your head, it’s not at all so straightforward. The entire process of hearing, seeing, and moving is governed by disparate modules in the brain which communicate by sending electrical signals back and forth. In essence you have millions of tiny computers running in your head, processing input and spitting out output, and there is no one place in the brain where all this information comes together so that YOU can become aware of it…so how are you aware of anything?

Imagine this. Imagine we built a robot with all the advanced technology we have. The robot has the most advanced computer acting as its brain, and it’s got a whole humanoid body, with ears and eyes that actually work. The ears take in sound waves, map them phonetically, and compare them to dictionary entries, and an incredibly complex series of actions is initiated when the robot hears certain phrases. It has cameras for eyes and can pass what the cameras see to a central processing area that controls movement. When it hears “Watch out! Duck!” it maps the phrase and looks up what to do (when you hear “watch out”, look up); the central processor receives an instruction to direct the eyes upward; the cameras swivel and record a fast-approaching object. This information is sent to the central processor, which has instructions to move the body when it is in the path of a fast-approaching object. This is all highly complex, but nothing in there is really a stretch. But here is the question. In both situations, the same thing happened. You and the robot acted in exactly the same way. But how many of you would say the robot was intelligent? That the robot understood what was going on? Few of us. And yet, what is so different about us? Why is it that you have this privileged understanding that a robot can’t have when, under the hood, we both have a bunch of stupid little computers mindlessly running their little programs (in our case, neurons firing according to strict rules)?
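To make the “nothing in there is really a stretch” point concrete, here is a minimal Python sketch of the robot’s pipeline as a chain of lookup rules. Everything in it is hypothetical: the phrase table, the `Percept` fields, and the speed threshold are illustrative assumptions, not a description of any real robot. Each function only matches inputs against rules and emits an instruction; none of them “knows” what a baseball is.

```python
from dataclasses import dataclass

# Hypothetical phrase table, following the post's example rule:
# "when you hear 'watch out', look up".
PHRASE_RULES = {"watch out": "look_up"}

# Assumed cut-off for a "fast-approaching" object; the value is arbitrary.
FAST_SPEED_M_PER_S = 10.0


@dataclass
class Percept:
    """What the (hypothetical) cameras report about an object in view."""
    approaching: bool
    speed_m_per_s: float
    on_collision_path: bool


def ears(utterance: str) -> list[str]:
    """Match known phrases in the heard text and return the linked instructions."""
    text = utterance.lower()
    return [action for phrase, action in PHRASE_RULES.items() if phrase in text]


def central_processor(instructions: list[str], percept: Percept) -> list[str]:
    """Turn instructions and camera data into motor commands, purely by rule."""
    commands = []
    if "look_up" in instructions:
        commands.append("tilt_cameras_up")
    # Rule: get out of the way of fast objects on a collision path.
    if (percept.approaching and percept.on_collision_path
            and percept.speed_m_per_s >= FAST_SPEED_M_PER_S):
        commands.append("lower_head")
    return commands


# In the story the cameras only see the ball after tilting up; for brevity
# the percept is passed in directly here.
baseball = Percept(approaching=True, speed_m_per_s=30.0, on_collision_path=True)
print(central_processor(ears("Watch out! Duck!"), baseball))
# ['tilt_cameras_up', 'lower_head']
```

Every step is a string match or a numeric comparison. You could make the tables as large and intricate as you like without adding anything that looks like understanding, which is exactly the intuition the question above is pressing on.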

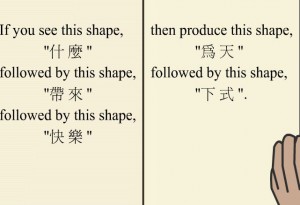

This is the problem that John Searle was contemplating when he created the Chinese Room thought experiment. Searle was criticizing people who were trying to develop artificial intelligence by pointing out that building a robot that could interact with the world in seemingly intelligent ways would not necessarily make it conscious or aware. If a machine could convincingly simulate intelligent conversation, it still wouldn’t actually “understand” that conversation, because it would just be manipulating symbols according to predefined rules. Here is how he put it. Imagine there is a man in a room. Slips of paper are passed in through one end of the room. These slips contain Chinese characters (questions or statements). The man in the room has filing cabinets full of instructions that let him match up the Chinese characters passed into the room, and that then tell him how to compose a new set of characters and pass them out of the room. The end result is that this process simulates a conversation in Chinese with the people outside the room. Make this process as complicated as you want. To account for the fact that the same sentence can lead to different responses, have the slips of paper carry a serial number that acts as the “current state of the system”, sending the man to a different filing cabinet depending on that state and thus producing a different response. The point is that even though a conversation is occurring, the man in the room in no way understands Chinese. Seemingly intelligent behavior does not necessitate real intelligence.
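In computing terms, the room with its serial numbers behaves like a finite-state transducer: a rulebook keyed on the current state and the incoming symbols, which yields outgoing symbols and a new state. The Python sketch below is only an illustration under that reading; the rulebook entries, the state names (`S0`, `S1`, `S2`), and the fallback reply are invented for the example. Note that `ChineseRoom.pass_in` does nothing but an exact-match dictionary lookup, which is the whole point of the thought experiment.

```python
# Hypothetical rulebook: (current state, incoming slip) -> (outgoing slip, next state).
# The entries are invented; the operator never needs to know what they mean.
RULEBOOK = {
    ("S0", "你好"): ("你好！", "S1"),
    ("S1", "你好"): ("我们已经打过招呼了。", "S1"),
    ("S1", "你喜欢什么颜色？"): ("我喜欢绿色。", "S2"),
}

# Assumed fallback when no rule matches ("Sorry, please say that again.").
FALLBACK = "对不起，请再说一遍。"


class ChineseRoom:
    def __init__(self, start_state: str = "S0"):
        # Plays the role of the serial number written on each slip.
        self.state = start_state

    def pass_in(self, slip: str) -> str:
        """Find the matching rule by exact string comparison and follow it."""
        reply, next_state = RULEBOOK.get((self.state, slip), (FALLBACK, self.state))
        self.state = next_state
        return reply


room = ChineseRoom()
print(room.pass_in("你好"))  # 你好！
print(room.pass_in("你好"))  # 我们已经打过招呼了。 (same input, new state, different reply)
```

The same greeting produces two different replies only because the state changed, which is how the serial-number trick lets a fixed rulebook produce varied responses without any step involving the meaning of the characters.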

There are a few responses to this. One of them, often called the systems reply, states that while the man himself does not understand Chinese, the room as a whole does (man, cabinets, paper, pens, and all). This seems like a pretty silly response; how exactly can a room be intelligent? Can anyone honestly suggest the room understands anything? But Searle plays along and says, okay, try this: take the entire contents of the room, all the instructions, and have the man memorize every single one (for the sake of the experiment, imagine this can be done). Now the entire system is inside the man. People pass him pieces of paper, he writes meaningless symbols on other pieces of paper based on the rules he memorized, and he hands them back. To other people it would seem that this man understands Chinese, but this is obviously not the case.

A more substantial reply to Searle invokes the fact that language is understood by embodied agents, not by rooms that pieces of paper are slipped into. Language emerges from complex social interaction with other people and with things in the world. These replies (often grouped together as the robot reply) propose embedding the Chinese room in a robot, giving it sensory organs and a way to interact with other people, and then ask: who is to say it’s not intelligent? Well, Searle, that’s who. He argues that the behavior only seems intelligent; since we know that under the hood what is going on is meaningless symbol manipulation, there is no true representation or intelligence. Whether the robot is interacting with the world or not doesn’t change that fact.

It seems we’ve hit an impasse. We can’t figure out how to grant a machine intelligence, since simply manipulating symbols neither bestows intelligence nor makes the symbols meaningful. And yet these same arguments seem to hold up against us intelligent humans, and at this point we have to begin wondering, what exactly are the criteria (the necessary and sufficient conditions) for understanding language? We can’t just say that humans understand language because we are conscious…because that just begs the question, how does consciousness arise from simple biological processes? So what do you think? What are some ways for us to get out of this problem? How can we account for our human ability to understand language, to ‘represent’ the meaning of words, as cognitive scientists would say? The implications of this are far-reaching, both for the ability to construct intelligent robotic systems and, more importantly, for understanding the very fundamental nature of human cognition. My next post will explore some ways of thinking about this question that I find more promising. In the meantime, what are your thoughts? How would you reply to Searle’s Chinese Room thought experiment?

Read the follow-up post on language acquisition and development!

11 Responses

11:04 am

I define intelligence as information processing, and consciousness as the feelings and sensations, and as such I don’t have a problem with any of this.

Searle’s room is intelligent. As is the robot. But not conscious.

I think consciousness also involves understanding, so I’m going to ponder it out loud.

The native speaker, when given the Chinese symbol for ‘car’, actually thinks of a car. If, while learning English, the Chinese person is given the literal English word ‘car’ and then is given a description of a car in Chinese, they will recognize that ‘car’ and ‘che’ refer to the same thing. The room cannot make this connection by definition.

To help clarify what I mean by ‘understands Chinese’, it is helpful to think of actual, concrete linguistic interactions. I immediately thought of a question that would break the Chinese Room or any equivalent.

“Do you prefer green or purple?” I’ll assume the room is held to an honesty standard. (Assumption convenient but not necessary.) Given this, the rules of the room by definition have to include a statement like, “If you see these symbols, if you prefer green write (x) and if you prefer purple write (y).” Even with just this example, the instructions unavoidably include avenues to understanding or decoding the symbols, or else it won’t be able to converse fluently in Chinese.

That is, you can’t write a Chinese Room program without implicitly including some understanding somewhere along the line. The definition is self-contradictory.

Which is unfortunate. I was hoping to work on pinning down understanding vis-à-vis consciousness.

12:30 pm

Hi Alrenous, did you have a chance to look at my follow-up post to this?

http://cognitivephilosophy.net/consciousness/language-acquisition-and-development/

I explore in much more detail the nature of language and meaning. I don’t do a full exploration of the relationship between language and consciousness, but I think it’s a start at thinking through some of the issues to do with representation and mental states. I have a very different take on what language is, how words get their meaning, and how this all relates to consciousness, and I’ll gladly discuss further after you give that post a go!

11:11 am

Sorry for the double post. I was wrong about thinking I was done.

Essentially, the Chinese room is a communication device, like a telephone. The Chinese speaker in your very handy picture is speaking with the writer of the program, through the medium of the Englishman in the room. Apart from the fact that the writer has planned out the conversation in advance and actually managed to think up all the possibilities, it is a completely normal conversation between two fluent Chinese understander-ers.

9:33 pm

I found this experiment will lead to some tricky thinking.

Suppose an English citizen has been raised and lives in an English-only environment. Then, at the age of 14, his parents move to China and he starts to learn Chinese. By the age of 25, he speaks and writes perfect Chinese and becomes a Chinese citizen.

We could in fact argue that this man is not really Chinese. After all, his brain was exposed to English during his childhood. Also, his parents and grandparents were English speakers.

As proof, we could even take a brain scan and show that different brain areas are active when he listens to Chinese.

We all know that this is not true. This man does understand the real nature of things, just like his best Chinese friends!

Going back to this test, I find it is just like the example I gave. How can you make sure a human really understands what he says? Are we always fully aware of what we answer?

The answer is no! We try our best most of the time. There are simple questions, like “What is your name?”, to which we give the answer without even thinking further. When we say our name, we don’t start thinking deeply about what our name means, how our name has influenced our life, and so on.

Also, take the question “How are you?” Even if the true answer would be “I am having a bad day because of…”, most of the time we answer: fine! We know it may not be appropriate to start telling our life story.

In that case, we answer something because that’s what most people do. We understand it is just to be polite. But are we really understanding? If so, why do people ask that question? They know the answer, and they know that you probably know they don’t really care how you are!

I think that no matter how smart a robot becomes, we will treat it differently. Aren’t we the ones creating the concept used to decide whether a robot could be intelligent?

We view and understand our world through our knowledge and experience in life. We (humans) look at it from a human point of view.

I think we should focus more on how we would like our world to be. Surely a robot will become smarter than each one of us. What would its place in our world be? I know that’s another topic. 😉

11:45 pm

Hi F-Cycles, I think you might have slightly misunderstood the relevance of the thought experiment. It might make a little more sense after you read my follow-up post: http://cognitivephilosophy.net/consciousness/language-acquisition-and-development/. I discuss what I think is a more fruitful way to think about language and meaning. But at root this question isn’t necessarily about robots or understanding the meanings of words. It’s about consciousness and mental states more generally. How is it that subjective experience can arise from cells passing electro-chemical signals back and forth? Language is a special case of that more general problem. Solving the problem would give us amazing insight into one of the oldest questions in philosophy, and now in cognitive science. And yes, it would have a significant impact on the project and possibility of artificial intelligence.

A couple things worth noting:

“We could in fact argue that this man is not really Chinese. After all, his brain was exposed to English during his childhood. Also, his parents and grandparents were English speakers.

As proof, we could even take a brain scan and show that different brain areas are active when he listens to Chinese.”

Well, you’re asserting the result of this experiment so that you can argue against it. But that’s an assumption. This is an empirical question. I don’t know the answer to it, but it could well be that the person’s brain would light up in the same way as a native Chinese speaker’s.

But more importantly, even if you’re right, your conclusion doesn’t follow. The question isn’t whether two people mean the exact same thing when they use a word; I actually think it’s likely that this is never the case. Language is too associative for two people ever to be in the same mental state when thinking about a concept. What the question is about is how any meaning can arise in the first place. We have a conscious, subjective understanding associated with words and sentences and concepts. When a computer program converses, it does not. A bunch of 0s and 1s move up and down a bunch of circuits, and this corresponds to calculations, the output of which is more 0s and 1s, and those are converted into acoustic distortions emitted from speakers. For us human beings, those acoustic distortions, once they reach our ears and are converted into electrical signals, somehow mean something to us. Why? How? That’s what the above thought experiment is really about.

“Are we always fully aware of what we answer?

The answer is no!”

Again, I think you might be interpreting my post in a way it wasn’t intended. Yes, oftentimes we are not consciously attentive or aware when doing certain things. You talk about two slightly different uses of these terms. One is that we often respond immediately without thinking. The other is that we don’t tend to contemplate the deeper meanings and associations of individual words when we say them. These points are both true. But the broader point is that you could if you wanted to. You could stop after giving your name to someone and think about the deep meaning of your name. Human beings have this possibility; a computer does not. Similarly, suppose you told someone “fine” after they asked how you were, and they replied, “No, seriously, I’d really like to know how you’re doing.” You, being a conscious person, can think about your life and respond with words, all of whose meanings you understand. A computer doesn’t “think” (in the sense of being consciously aware, of having subjective experience); it just computes. The thought experiment proposes an argument against the idea that a computer would understand language, or have any conscious experience, in virtue of its computational symbol manipulation processes. But what makes the thought experiment even more interesting is that it’s wrapped up in these questions about what makes brains so special.

There are the sort of surface-level definitions of “understanding” and “meaning” that we use in everyday life, and there are these more fundamental ideas that philosophers and cognitive scientists talk about when they use those words. Just another quirky feature of human language that we don’t have four different words for these disparate concepts!

12:55 pm

Hi Greg, in fact I was pointing out assumptions and conclusions that don’t reflect what I think. I was trying to point out arguments that, if we applied them to a group of humans, would be considered racist. I re-read your article and also read “http://cognitivephilosophy.net/consciousness/language-acquisition-and-development/”. My previous comment was meant to point out that some people will always argue that others are different.

I am going back to this post, where you ask “what exactly are the criteria (the necessary and sufficient conditions) for understanding language? We can’t just say that humans understand language because we are conscious…because that just begs the question, how does consciousness arise from simple biological processes? So what do you think?”

I think that consciousness arises from a certain level of complexity, which translates into a certain number of functions all interconnected together. If we take language alone, words have meanings which translate into different things. These things refer to other things, and so on. If we think about, let’s say, a “dog”, each of us might think of a different dog we saw or owned.

So, to your question of how consciousness arises from simple biological processes, my guess is that there is probably a simple process which is similar at different scales and needs to grow to a certain level before it becomes complex enough for us to qualify it as consciousness.

As for what exactly the criteria are for understanding a language: I am currently trying to learn a third language. I am just starting, with a few books on my own. Sometimes I happen to go to the country where it’s spoken. I live there, and almost no one speaks the two other languages I know. Despite knowing only a few words, I manage to understand things based on people’s actions. I watch and see if the pace is fast or slow, if people are happy or sad. If I hear a sound often, I start to repeat it. That sound won’t have any meaning, but I keep saying it so I can try to master the pronunciation and hopefully get more interaction with people. It’s harder when we are adults, because kids have their social interaction through games with adults: all sorts of simple games, and we are rewarded by children’s smiles. This leads to your conclusion in http://cognitivephilosophy.net/consciousness/language-acquisition-and-development/ (social interaction). When you hear someone say “No! Hot stove!”, even if you don’t understand the sounds, the intonation of “No” will be so loud and serious that you will understand “stop, something bad, makes people angry”.

Even with fewer than 80 words mastered, I do have some understanding. I feel I am doing badly; after all, most of the time I understand nothing. But with lots of concentration, I can understand more and more. Over time, I start to feel that what I have learned is useful, and slowly I know more and understand more.

I think what is different between machines and biological processes is that biological processes are far more flexible. But biological processes are also very slow! I found that learning 6 new words per day is probably the best I can do. After learning 120 words, I start to confuse these new words with one another. So, obviously, the process of learning and understanding is not straightforward. But with time and effort, the learning process will succeed.

If I go back to AI, I think we focus too much on building systems that do something useful, like a machine that analyzes text to give answers. I think we should master how to build complexity and have a system develop by itself. Emotions also seem to play a big role in that whole process.

3:38 pm

“If I go back to AI, I think we focus too much on building systems that do something useful, like a machine that analyzes text to give answers. I think we should master how to build complexity and have a system develop by itself. Emotions also seem to play a big role in that whole process.”

I mostly agree with your assessment here. We tend to build computers and machines to do highly specific tasks, and we build in all the assumptions and programming they need to complete those tasks. When we try to build more general machines that can interact with their environment, we still build in many of these assumptions. So the machines we build are really bad at understanding context and dealing with what we call the frame problem. They can’t really handle error knowledge or self-guided error detection and error correction. I think you’re right about emotions. But I do think that emotions can be replicated functionally. Some very convincing ideas in biology treat emotions as a sort of heuristic for behavior: system states that cause system-wide changes to many disparate functions.

I also agree with you about the level of complexity, but I don’t think complexity is sufficient. As I point out, and as you discuss as well, certain types of interaction are also necessary for language understanding to arise. I think that consciousness also arises from certain types of interaction: internal interactions within the brain, interactions between the brain and the body, and interactions of the body with the environment. That’s certainly not a final answer, but I think it provides a more promising approach than certain computationalist and connectionist approaches.

4:08 pm

I also think that emotions can be replicated. However, I think emotions are also learned over the course of our lives. We can improve our emotional responses, although some fundamental reactions would be harder (but not impossible) to change.

I agree with you that the interactions are necessary. In fact, as I mention in my blog, I was moving away from the computer projects I used to do in order to focus on what makes me enthusiastic about computers: human cognition through AI.

1:35 pm

Not exactly the whole answer, but I think I’d add a few more criteria. First, I’d add the need for an interior life: allow the entity to be constantly going over various unconnected points of data and arriving at new conclusions, making new connections, etc. Certainly our “man in the room” model can do that, but only because it contains the man, who can already do this on his own. The system as a whole doesn’t really exemplify this, because the data the man is processing is unintelligible to him and not really part of his language of thought. Consider that some claim you don’t really know another language until you dream in it. It’s a bit of a loose definition, but it makes sense, since such an act illustrates an unconscious assimilation of the language that goes beyond mere memorization and recall.

The other points I’d add are imprecision and inaccuracy. Perhaps these sound like bad things, but we depend on them even down to the level of our DNA (I won’t bore you with the details, but it’s how we become the genetic individuals we are, how our immune system adapts to infinite challenges, etc.). It is this sort of “looseness” in thought that allows for metaphor, for instance.

Imprecision allows more than a simple 1:1 result to a given question. We are capable of infinitely expanding our capabilities simply by having so many different responses to a given situation/question, any of which may be perfectly acceptable, and which may in many cases not be dependent on a given “state of the system”.

Inaccuracy may sound like a universally bad thing for intelligence, but the occasional mental slip can be incredibly useful. Getting something wrong and never realizing the error is clearly bad, but think of all the additional understanding you can gain from a corrected error, understanding you would never have had if you had never made the mistake. A thought process that progresses linearly through a completely logical course may get you somewhere, but it’s narrow and unoriginal. Sure, there are search engines that learn from mistakes, and that even use imprecision to suggest a broad array of options, but they don’t really get the benefit of accidental wanderings down lengthy wrong pathways, gleaning wisdom along the way.

Just the thoughts of a young doc who doesn’t really think often about this stuff, but thought I’d throw in my .02

5:39 pm

Thanks for your comments David. I’m actually with you on the imprecision and inaccuracy criteria. I think agent directed error guided behavior is one of the most important things about cognition, and most theories in cognitive science do a terrible job of accounting for it. I also think there’s an important sort of indeterminacy in our representations of the world that allows for a more fluid interaction between the agent and the environment than most classic theories of cognition can account for.

The inner life point is interesting. In the Chinese Room thought experiment we have a person who already has an inner life, and we’re pressing a point about understanding language. So it’s presupposing inner life to make a point. But at root, the Chinese Room is fundamentally about what having an inner life consists of. Searle was arguing against computational models of mind that said that cognition consisted in symbol manipulation. It wasn’t just about language, but about mental life in general.

I present what I think is a way out of the problem (in regards to language acquisition) in my follow up post (link in the post above), and I think that my solution has the benefit of also offering an alternative way to think about consciousness and cognition more generally. I mention in the follow up post that it certainly doesn’t answer all our questions about human cognition, but I think it offers a firmer ground for thinking through problems.

4:50 am

Yes, the sum of the parts of the room, including the man, WOULD be conscious, and there is no reason for it not to be. If you think that’s absurd because a collection of inanimate objects can’t be conscious, consider that the brain is just made of a bunch of neurons that fire electrically. If you think it’s absurd because you can’t have a living thing inside a mind, think again: the brain is made of cells, each of which is alive. You could give each of the cells a mini-brain of its own to make it conscious, and it still wouldn’t make our brain unconscious!

Therefore the Chinese room argument is flawed.

And in any case, if one believes the Chinese room is proof that strong AI is impossible, then you’d have a paradox: even if one simulated a brain perfectly, it would not be intelligent. Even if we built a fake brain out of wires (arranged the same way as the real neurons in a human brain), it would not be intelligent. Yet it is functionally the same as a human brain. And Searle resolves this paradox by believing in something really strange:

“Searle is adamant that ‘human mental phenomena [are] dependent on actual physical–chemical properties of actual human brains.’”

If our neurons were made of plant cells, or man-made cells, or man-made neuron-simulators, would Searle and his supporters really think that these humans (with identically arranged brains but just made of a different material or chemicals) would be non-conscious? THAT is absurd.